Parallelisation and Amdahl’s Law

Understanding the limits of parallel speedup

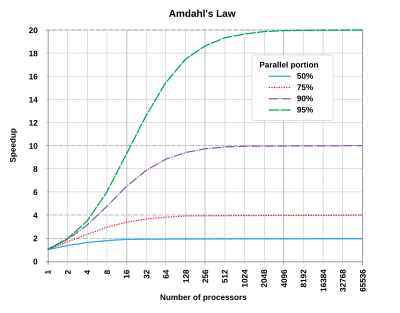

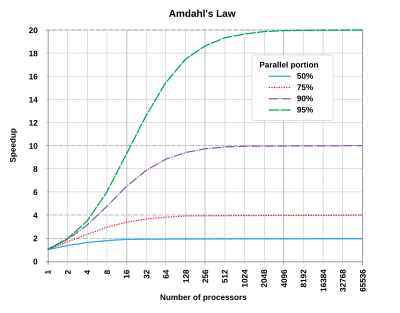

Amdahl’s Law

Amdahl’s Law graph. Image source: Daniels220 at English Wikipedia, CC BY-SA 3.0, via Wikimedia Commons

Key Insights

- The larger the parallelised portion of a job, the greater the speedup from adding more cores

- There comes a point of diminishing returns where adding more cores has a negligible effect:

  - If 50% of the job is parallelised, the greatest possible speedup is 2 times, however many cores are used

  - If 95% of the job is parallelised, the greatest possible speedup is 20 times
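The two limits above follow from Amdahl's Law: if a fraction p of the work is parallelised across n cores, the predicted speedup is 1 / ((1 - p) + p / n), which approaches 1 / (1 - p) as n grows. A minimal Python sketch of that formula follows; the function names `amdahl_speedup` and `max_speedup` are illustrative and not part of the course material.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup predicted by Amdahl's Law for a parallel fraction and core count."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time; only the parallel part shrinks.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def max_speedup(parallel_fraction: float) -> float:
    """Upper bound on the speedup as the number of cores grows without limit."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if parallel_fraction == 1.0:
        return float("inf")
    return 1.0 / (1.0 - parallel_fraction)


if __name__ == "__main__":
    for p in (0.5, 0.95):
        for n in (1, 10, 100, 1000):
            print(f"p={p:.2f}, cores={n:4d}: speedup = {amdahl_speedup(p, n):.2f}")
        print(f"p={p:.2f}: limit = {max_speedup(p):.1f}")
```

Running the script prints the speedup for each core count next to its limit, which makes the flattening of the curve easy to see.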

Parallelisation Strategies

The way in which you parallelise your work depends on the problem you are solving!

Task Parallelism

- Divide work into independent tasks

- Each processor works on different tasks

- Good for different types of calculations
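As a rough illustration of task parallelism, the sketch below submits three different calculations on the same text to a pool of workers. All function names are hypothetical. It uses `ThreadPoolExecutor` from the standard library so that it runs anywhere; for CPU-bound Python code, `ProcessPoolExecutor` offers the same `submit` interface and is usually the better fit.

```python
from concurrent.futures import ThreadPoolExecutor


def count_words(text: str) -> int:
    """Task 1: number of whitespace-separated words."""
    return len(text.split())


def longest_word(text: str) -> str:
    """Task 2: first word of maximal length."""
    return max(text.split(), key=len)


def count_vowels(text: str) -> int:
    """Task 3: number of vowels, ignoring case."""
    return sum(ch in "aeiou" for ch in text.lower())


def run_tasks(text: str) -> dict:
    """Run the three independent tasks concurrently and collect their results."""
    tasks = {"words": count_words, "longest": longest_word, "vowels": count_vowels}
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        # Each worker receives a different function: different tasks, same input.
        futures = {name: pool.submit(func, text) for name, func in tasks.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(run_tasks("the quick brown fox"))
```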

Data Parallelism

- Same operation applied to different data

- Each processor works on a subset of the data

- Good for large datasets
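Data parallelism can be sketched in the same way: the data is split into chunks and every worker applies the same operation to its own chunk. The `brighten` operation and the helper names below are hypothetical stand-ins for real work. Threads are used only to keep the example portable; a process pool or an MPI-based approach would be more typical on an HPC system.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 10) -> int:
    """The single operation applied to every data item (clamped to 255)."""
    return min(pixel + amount, 255)


def process_chunk(chunk: list) -> list:
    """Apply the same operation to every item in one chunk."""
    return [brighten(p) for p in chunk]


def split_into_chunks(data: list, n_chunks: int) -> list:
    """Split data into at most n_chunks contiguous pieces of near-equal size."""
    size = -(-len(data) // n_chunks)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_brighten(data: list, workers: int = 4) -> list:
    """Process each chunk on its own worker and stitch the results together."""
    if not data:
        return []
    chunks = split_into_chunks(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks.
        results = list(pool.map(process_chunk, chunks))
    return [p for chunk in results for p in chunk]


if __name__ == "__main__":
    print(parallel_brighten([0, 100, 250, 255], workers=2))
```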

Practical Implications

- Before scaling up:

  - Profile your code to understand bottlenecks

  - Identify which parts can be parallelised

  - Consider the communication overhead

- When choosing resources:

  - More cores ≠ always faster

  - Consider memory requirements per core

  - Balance cores vs. memory vs. time
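One way to carry out the profiling step is Python's built-in `cProfile` module. The sketch below profiles a toy pipeline and reports the functions with the highest cumulative time. The functions `load_values`, `heavy_compute` and `pipeline` are hypothetical placeholders for your own code.

```python
import cProfile
import io
import pstats


def load_values(n: int) -> list:
    """Stand-in for reading input data."""
    return list(range(n))


def heavy_compute(values: list) -> int:
    """Stand-in for the expensive part of the job."""
    return sum(v * v for v in values)


def pipeline(n: int) -> int:
    """The whole job: load the data, then compute on it."""
    return heavy_compute(load_values(n))


def profile_pipeline(n: int, top: int = 5):
    """Run the pipeline under cProfile and return (result, text report)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = pipeline(n)
    profiler.disable()

    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream)
    # Sort by cumulative time so the most expensive call chains come first.
    stats.sort_stats("cumulative").print_stats(top)
    return result, stream.getvalue()


if __name__ == "__main__":
    result, report = profile_pipeline(200_000)
    print(report)
```

The functions near the top of the report are the first candidates to examine when deciding which parts are worth parallelising.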

Real Performance Example

Scenario: Image processing job with 1000 files

- Serial: 10 hours on 1 core

- 10 cores: ~1.2 hours (not exactly 1 hour due to overhead)

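- 100 cores: ~8 minutes (it would be 6 minutes if perfectly parallel; the rest is overhead)

- 1000 cores: Likely slower than 100 cores, as each core handles only one file and overhead dominates!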
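The shape of this scenario can be reproduced with a toy cost model. The sketch below assumes that the work divides evenly across cores and that overhead grows linearly with the number of cores. Both the linear form and the value of 0.02 minutes per core are hypothetical, chosen purely for illustration, and the model does not attempt to reproduce every figure above. The function names are also illustrative.

```python
def estimated_runtime_minutes(
    serial_minutes: float,
    cores: int,
    overhead_per_core_minutes: float = 0.02,  # hypothetical value
) -> float:
    """Toy model: evenly divided work plus overhead that grows with core count."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    compute = serial_minutes / cores
    overhead = overhead_per_core_minutes * cores
    return compute + overhead


def best_core_count(
    serial_minutes: float,
    candidates: list,
    overhead_per_core_minutes: float = 0.02,  # hypothetical value
) -> int:
    """Return the candidate core count with the lowest estimated runtime."""
    return min(
        candidates,
        key=lambda n: estimated_runtime_minutes(
            serial_minutes, n, overhead_per_core_minutes
        ),
    )


if __name__ == "__main__":
    serial = 10 * 60  # the 10-hour serial job, in minutes
    for n in (1, 10, 100, 1000):
        print(f"{n:5d} cores: {estimated_runtime_minutes(serial, n):8.2f} min")
    print("best of the candidates:", best_core_count(serial, [1, 10, 100, 1000]))
```

Under these assumptions the runtime falls at first and then rises again once overhead outweighs the shrinking share of work per core. This is why it is worth testing a few core counts before requesting the maximum.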

Exercise

Return to the main notes and try to answer the questions!

Next Steps

Now you understand HPC basics!

Let’s learn how to actually connect and use the system.

HPC1: Introduction to High Performance Computing | University of Leeds